Only released in EOL distros:

Package Summary

A package that allows a remote user to request and assist the detection, recognition and pose estimation of tabletop objects, primarily using an rviz display.

- Author: David Gossow

- License: BSD

- Repository: wg-ros-pkg

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_tabletop_manipulation_apps/tags/pr2_tabletop_manipulation_apps-0.4.5

Package Summary

A package that allows a remote user to request and assist the detection, recognition and pose estimation of tabletop objects, primarily using an rviz display.

- Author: David Gossow

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.5-branch

pr2_object_manipulation: active_realtime_segmentation | fast_plane_detection | manipulation_worlds | object_recognition_gui | object_segmentation_gui | pick_and_place_demo_app | pr2_create_object_model | pr2_grasp_adjust | pr2_gripper_grasp_controller | pr2_gripper_grasp_planner_cluster | pr2_gripper_reactive_approach | pr2_gripper_sensor_action | pr2_gripper_sensor_controller | pr2_gripper_sensor_msgs | pr2_handy_tools | pr2_interactive_gripper_pose_action | pr2_interactive_manipulation | pr2_interactive_object_detection | pr2_manipulation_controllers | pr2_marker_control | pr2_navigation_controllers | pr2_object_manipulation_launch | pr2_object_manipulation_msgs | pr2_pick_and_place_demos | pr2_pick_and_place_tutorial | pr2_tabletop_manipulation_launch | pr2_wrappers | rgbd_assembler | robot_self_filter_color | segmented_clutter_grasp_planner | tabletop_collision_map_processing | tabletop_object_detector | tf_throttle

Package Summary

A package that allows a remote user to request and assist the detection, recognition and pose estimation of tabletop objects, primarily using an rviz display.

- Author: David Gossow

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.6-branch

Package Summary

Backend for the interactive object recognition rviz plugin.

- Author: David Gossow

- License: BSD

- Source: git https://github.com/ros-interactive-manipulation/pr2_object_manipulation.git (branch: groovy-devel)


Overview

The PR2 interactive object detection tool provides a graphical user interface that lets a remote user run scene segmentation and object recognition on a PR2 robot. Recognition can run fully autonomously or interactively, using the functionality of object_recognition_gui. The results of these steps can be used by pr2_interactive_manipulation to pick up the segmented or recognized objects.

Starting the PR2 Interactive Object Recognition Tool

There are two options to run the user interface. One is to bring up pr2_interactive_manipulation, which will load all the components described here.

The other is to run the object detection part only, for which you will have to do the following:

On the robot

Start the following launch file:

roslaunch pr2_interactive_object_detection pr2_interactive_object_detection_robot.launch

Or, if you have a local Kinect:

roslaunch openni_camera openni_node.launch

roslaunch rgbd_assembler rgbd_kinect_assembler.launch

roslaunch household_objects_database objects_database_remote_server.launch

rosrun willow_tod recognition_node camera:=/camera/rgb points:=/camera/rgb/points -C detect_config.txt
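If you prefer a single terminal, the four local-Kinect commands can be started from one script. The following is a minimal Python sketch, not part of the package: the helper names `build_argv` and `launch_all` and the `dry_run` option are hypothetical, and the sketch assumes that a ROS master (roscore) is already running and that `detect_config.txt` is in the current working directory.

```python
import shlex
import subprocess

# The four local-Kinect commands, copied verbatim from the instructions.
# Each one normally runs in its own terminal.
KINECT_COMMANDS = [
    "roslaunch openni_camera openni_node.launch",
    "roslaunch rgbd_assembler rgbd_kinect_assembler.launch",
    "roslaunch household_objects_database objects_database_remote_server.launch",
    "rosrun willow_tod recognition_node camera:=/camera/rgb"
    " points:=/camera/rgb/points -C detect_config.txt",
]


def build_argv(commands):
    """Split each command string into an argv list suitable for Popen."""
    return [shlex.split(cmd) for cmd in commands]


def launch_all(commands, dry_run=False):
    """Start every command as a child process and wait for all of them.

    With dry_run=True the commands are only printed, not executed.
    Hypothetical helper; assumes roscore is already running.
    """
    argvs = build_argv(commands)
    if dry_run:
        for argv in argvs:
            print(" ".join(argv))
        return argvs
    procs = []
    try:
        for argv in argvs:
            procs.append(subprocess.Popen(argv))
        for proc in procs:
            proc.wait()
    except KeyboardInterrupt:
        pass  # Ctrl-C: fall through to the cleanup below
    finally:
        # Stop any child still running (after Ctrl-C or a failed start).
        for proc in procs:
            if proc.poll() is None:
                proc.terminate()
        for proc in procs:
            proc.wait()
    return argvs
```

Call `launch_all(KINECT_COMMANDS, dry_run=True)` to print the commands without running them, or `launch_all(KINECT_COMMANDS)` to start them; pressing Ctrl-C stops all child processes.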

Then start rviz and add the "Interactive Object Detection" and "Object Recognition popup window" displays.

On the desktop

Start the following launch file:

roslaunch pr2_interactive_object_detection pr2_interactive_object_detection_desktop.launch

Note that this launch file will bring up rviz. The tool is implemented as an rviz plugin. For everything to work, you will have to add the following displays:

- Interactive Object Detection/Interactive Object Detection: This brings up a control dialog with buttons to trigger segmentation and object recognition, and to make the robot's head look at a spot directly in front of the robot.

- Object Recognition GUI/Object Recognition Pop-Up Window: This creates a pop-up window once interactive object recognition has been requested. It does not have to run in the same rviz instance as the control dialog.

Note that the control dialog itself contains no functionality; it forwards all user input to a separate node, pr2_interactive_object_detection_backend. This allows the front end to be replaced by other user interfaces.
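To illustrate why this split makes the front end replaceable, here is a minimal, self-contained Python model. It does not use ROS and is not the package's actual interface: the `Command` values, the `Backend`, `RvizDialog` and `ScriptFrontEnd` classes, and the `interactive` flag are hypothetical stand-ins for the backend node, the rviz control dialog, and an alternative user interface.

```python
from enum import Enum


class Command(Enum):
    # Hypothetical command set mirroring the control dialog's buttons.
    SEGMENT = "segment"
    RECOGNIZE = "recognize"
    LOOK_AT_TABLE = "look_at_table"


class Backend:
    """Stand-in for pr2_interactive_object_detection_backend.

    All real work would happen here; front ends only forward commands.
    """

    def __init__(self):
        self.log = []  # every (command, interactive) pair received

    def execute(self, command, interactive=False):
        if not isinstance(command, Command):
            raise ValueError(f"unknown command: {command!r}")
        self.log.append((command, interactive))
        return f"{command.value} started"


class RvizDialog:
    """Front end 1: a button click is forwarded unchanged to the backend."""

    def __init__(self, backend):
        self.backend = backend

    def on_button(self, name, interactive=False):
        # Command(name) looks the enum member up by its value.
        return self.backend.execute(Command(name), interactive)


class ScriptFrontEnd:
    """Front end 2: a script issuing the same commands without any GUI."""

    def __init__(self, backend):
        self.backend = backend

    def run(self, commands):
        return [self.backend.execute(c) for c in commands]
```

Because both front ends only forward commands, swapping the dialog for another interface requires no change to the backend.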

Using the PR2 Interactive Object Detection Tool

Control Dialog

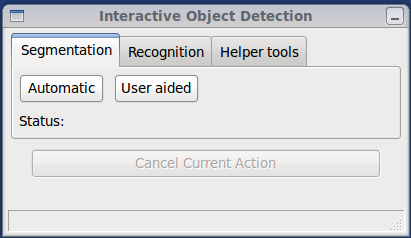

Dialog segmentation tab

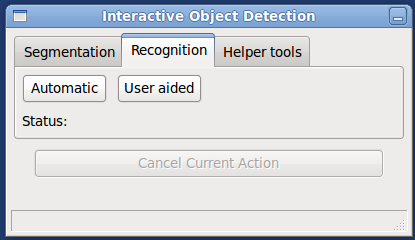

Dialog recognition tab

The Interactive Object Detection control dialog contains buttons to trigger segmentation and recognition. All actions can be canceled while they are being executed. In addition, it displays the current status of the actions in the status bar as well as information about the results of segmentation and recognition. Before performing the recognition step, it is necessary to run the segmentation step.

The results of each step will immediately become available in other tools like pr2_interactive_manipulation.
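The ordering rule, cancellation, and result sharing described for the control dialog can be modelled as a small state machine. This is a hypothetical Python sketch, not the backend's real logic: `DetectionSession`, its method names, and the listener callbacks (standing in for tools such as pr2_interactive_manipulation) are illustrative assumptions.

```python
class DetectionSession:
    """Hypothetical model of the control dialog's rules (not the real backend).

    - recognition may only start after a segmentation result exists
    - a running action can be cancelled, which discards its result
    - finished results are pushed to every registered listener
    """

    def __init__(self):
        self.segments = None   # last segmentation result
        self.objects = None    # last recognition result
        self.running = None    # "segment", "recognize", or None
        self.listeners = []    # callbacks standing in for other tools

    def subscribe(self, callback):
        self.listeners.append(callback)

    def start(self, action):
        if action not in ("segment", "recognize"):
            raise ValueError(f"unknown action: {action!r}")
        if action == "recognize" and self.segments is None:
            raise RuntimeError("run segmentation before recognition")
        self.running = action

    def cancel(self):
        self.running = None

    def finish(self, result):
        """Deliver a result; returns False if no action is running."""
        if self.running is None:
            return False
        if self.running == "segment":
            self.segments = result
        else:
            self.objects = result
        for callback in self.listeners:
            callback(self.running, result)
        self.running = None
        return True
```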

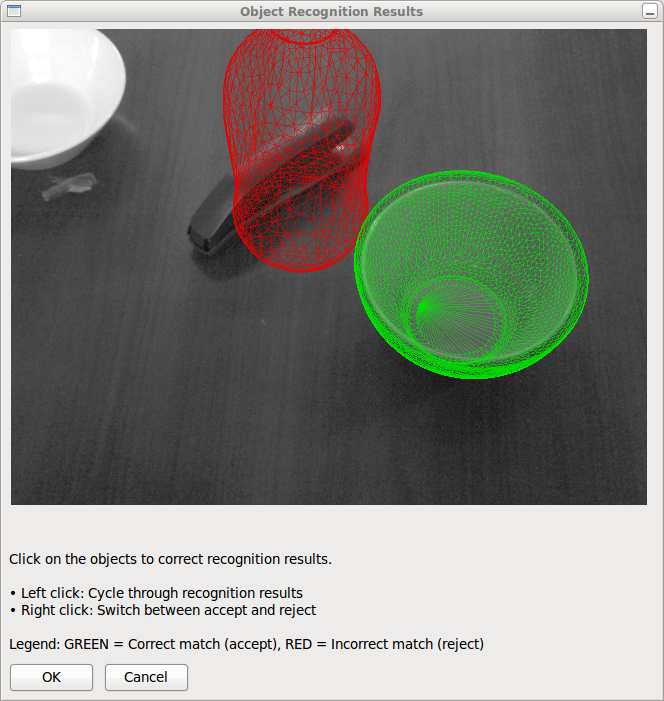

Interactive Object Recognition

The Interactive Object Recognition dialog pops up automatically once interactive recognition has been requested. It shows the scene as viewed by the robot's camera, with the meshes of all recognized objects overlaid on the camera image. As you move the mouse over this window, the mesh under the cursor is highlighted.

Accept or reject matches: A green mesh indicates a correct recognition result; a red mesh indicates a wrong result that will be omitted. Right-click on an object to switch between the two states.

Cycle through results: For each segment, there will be a number of possibly matching objects. To cycle through these matches, left-click on the corresponding mesh.
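The two mouse interactions can be summarized with a small model of one segment's candidate list. This Python sketch is hypothetical: `SegmentMatches` and `final_selection` are illustrative names, and it assumes that each segment starts out accepted (green), that left-clicking past the last candidate wraps around to the first, and that cycling does not change the accept/reject state; the documentation does not specify these details.

```python
class SegmentMatches:
    """Candidate object matches for one segment in the pop-up window.

    Hypothetical model: left-click cycles to the next candidate,
    right-click toggles between accepted (green) and rejected (red).
    """

    def __init__(self, candidates, accepted=True):
        if not candidates:
            raise ValueError("a segment needs at least one candidate")
        self.candidates = list(candidates)
        self.index = 0
        self.accepted = accepted  # assumed initial state

    @property
    def current(self):
        return self.candidates[self.index]

    @property
    def color(self):
        return "green" if self.accepted else "red"

    def left_click(self):
        # Assumed: wrap around after the last candidate.
        self.index = (self.index + 1) % len(self.candidates)
        return self.current

    def right_click(self):
        self.accepted = not self.accepted
        return self.color


def final_selection(segments):
    """Displayed match of every accepted segment; rejected ones are omitted."""
    return [s.current for s in segments if s.accepted]
```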