Only released in EOL distros:

Package Summary

This package provides the pipeline to calibrate the relative 6D poses between multiple cameras. The calibration can be performed online, and the results are published to tf in real time.

- Author: Vijay Pradeep, Wim Meeussen

- License: BSD

- Repository: wg-kforge

- Source: hg https://kforge.ros.org/calibration/camera_pose (branch: default)

Package Summary

Camera pose calibration using the OpenCV asymmetric circles pattern.

- Maintainer status: maintained

- Maintainer: Ronald Ensing <r.m.ensing AT delftrobotics DOT com>

- Author: Hans Gaiser <j.c.gaiser AT delftrobotics DOT com>, Jeff van Egmond <j.a.vanegmond AT tudelft DOT nl>, Maarten de Vries <maarten AT de-vri DOT es>, Mihai Morariu <m.a.morariu AT delftrobotics DOT com>, Ronald Ensing <r.m.ensing AT delftrobotics DOT com>

- License: Apache 2.0

- Source: git https://github.com/delftrobotics/camera_pose_calibration.git (branch: indigo)

This is a new package. This package is unrelated to the camera_pose_calibration package which was supported up to and including Groovy.

More information can be found in the readme at https://github.com/delftrobotics/camera_pose_calibration

New in Diamondback

camera_pose has moved to github: https://github.com/ros-perception/camera_pose

Stability

Note that camera_pose_calibration is part of a pre-1.0 stack, so its APIs are subject to change. We are actively using this stack in-house at Willow Garage with good results, and we continue to improve it.

We would love to hear about any issues you encounter, or any other feedback you have during the development process, via answers.ros.org. Also check out the troubleshooting page.

Overview

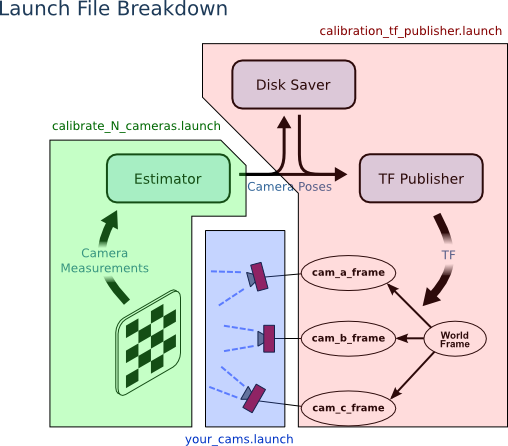

|

|

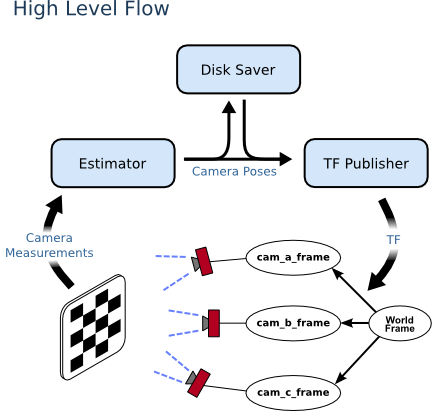

The camera_pose_calibration package allows you to calibrate the relative 6D poses between multiple cameras. It publishes the results to tf, making it easy to calibrate an existing camera setup, even while it is running. The calibration results are stored on disk and automatically reloaded when you restart your camera launch files.
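To make "relative 6D pose" concrete: if the poses of camera a and camera b are both known in some shared reference frame (for instance the checkerboard), the pose of b expressed in a's frame is a_T_b = (ref_T_a)^-1 · ref_T_b. The sketch below only illustrates that composition with 4x4 homogeneous matrices in plain Python; it is not the package's estimator, and all function names are hypothetical.

```python
# Illustrative sketch only: rigid-body (6D) transforms as 4x4 homogeneous matrices.
# Assumption: each camera pose is known in a shared reference frame; the names
# below are hypothetical and not part of camera_pose_calibration.

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p] (no general inverse needed)."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[0] + [p_inv[0]],
            r_t[1] + [p_inv[1]],
            r_t[2] + [p_inv[2]],
            [0.0, 0.0, 0.0, 1.0]]


def relative_pose(ref_T_a, ref_T_b):
    """Pose of camera b expressed in camera a's frame: a_T_b = inv(ref_T_a) * ref_T_b."""
    return matmul(invert_rigid(ref_T_a), ref_T_b)


def translation(x, y, z):
    """Pure translation, used here to build simple example poses."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]
```

For example, with camera a at x = 1 m and camera b at x = 3 m in the reference frame, `relative_pose(translation(1.0, 0.0, 0.0), translation(3.0, 0.0, 0.0))` places b 2 m along a's x axis. Using the transpose for the inverse relies on R being a proper rotation, which holds for any rigid camera pose.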

High Level Operation

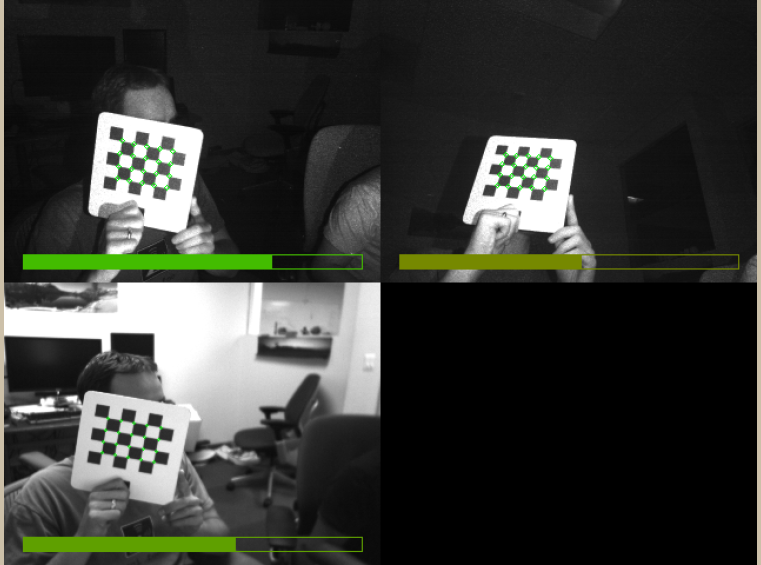

- The user holds a checkerboard in view of all the cameras

- The data capture code detects the checkerboard corners and passes these measurements to the estimator

- The estimator publishes the relative poses of the cameras

- The TF Publisher publishes these poses as tf transforms

Once the estimator publishes a calibration, the disk saver stores the calibration on disk and republishes it on system startup. A single view of the checkerboard should already give reasonable results, but capturing at least 2 or 3 checkerboard views is recommended.

Requirements

- All cameras must be rigidly attached to each other

- All cameras must be intrinsically calibrated:

  - For most cameras, see camera_calibration

  - For Kinects, see openni_camera/calibration

- All cameras must publish rectified images as well as camera info (see image_proc):

  - Each rectified image must be available on [camera_ns]/image_rect

  - Each camera info must be available on [camera_ns]/camera_info
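Before launching the calibration, it can help to check that every camera namespace actually provides the two topics listed above. The helper below is a hypothetical sketch in plain Python: it derives the expected topic names from each namespace and reports the ones that are not in a given list of advertised topics. In a running ROS 1 system that list could come from `rospy.get_published_topics()`, which returns `[topic, type]` pairs.

```python
# Hypothetical pre-flight check; not part of camera_pose_calibration.

def calibration_topics(camera_ns):
    """Return the (image, camera_info) topic names expected for one camera namespace."""
    ns = camera_ns.rstrip("/")  # tolerate a trailing slash in the namespace
    return ns + "/image_rect", ns + "/camera_info"


def missing_topics(camera_namespaces, advertised):
    """List the expected topics that do not appear in the advertised topic names."""
    advertised = set(advertised)
    missing = []
    for ns in camera_namespaces:
        missing.extend(t for t in calibration_topics(ns) if t not in advertised)
    return missing
```

Usage with a live system might look like `missing_topics(["/kinect1/rgb", "/prosilica"], [name for name, _type in rospy.get_published_topics()])`; an empty result means every camera exposes both topics.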

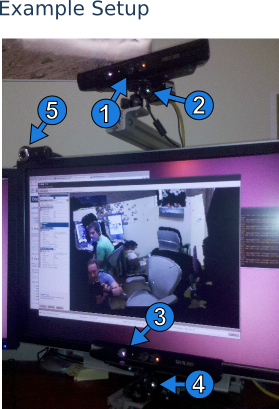

Above is an example setup that could be used with camera_pose_calibration. It consists of multiple Xbox Kinects (1 & 3), multiple Prosilica GC2450 cameras (2 & 4), and a Logitech webcam (5). The calibration can work with either the Kinect RGB image or the Kinect IR image, but not both at the same time, because the Kinect driver cannot stream RGB and IR simultaneously. To use the Kinect IR image, see the Kinect IR calibration page. Note that although the calibration can use the Kinect IR image, it does not work directly on the Kinect 3D point cloud.

Detailed Description

For a full discussion of all the various launch files and nodes "under the hood", please see the Detailed Description.

Getting started

Installation

The installation instructions assume you have already installed ROS on Ubuntu.

To install the camera_pose_calibration package and all its dependencies, run:

sudo apt-get install ros-electric-camera-pose

or

sudo apt-get install ros-fuerte-camera-pose

Make sure you add the installation directory of your ROS distribution (e.g. /opt/ros/electric or /opt/ros/fuerte) to your ROS_PACKAGE_PATH.

Source-only installations are currently quite difficult because of the large number of dependencies. This will become easier in future releases.

Setting up your launch files

To use the camera pose calibration, you need to include the calibration_tf_publisher.launch file in your own launch file:

This launch file listens to the output of the calibration optimization routine and publishes the result to tf. The result of the calibration is also saved to disk (/tmp/megacal_cache.bag), so the last calibration result is automatically loaded when you restart the cameras. Initially, while the cameras are still uncalibrated, nothing is published to tf; publishing starts only once the first checkerboard has been captured.
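The caching behaviour described above (keep only the latest result, reload it on restart, publish nothing until a calibration exists) can be sketched in a few lines. This is a hypothetical illustration only: the real package stores its cache as a bag file at /tmp/megacal_cache.bag, whereas this sketch uses a JSON file and invented class and function names, with each pose stored as an assumed `[x, y, z, qx, qy, qz, qw]` list.

```python
# Hypothetical sketch of a "latest calibration" cache; the real package uses a bag file.
import json
import os


class CalibrationCache:
    """Keeps only the most recent calibration on disk (illustrative stand-in)."""

    def __init__(self, path):
        self.path = path

    def save(self, poses):
        # Write to a temporary file first, then swap it in, so a crash mid-write
        # never leaves a half-written calibration behind.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(poses, f)
        os.replace(tmp, self.path)

    def load(self):
        # None means no calibration has been stored yet.
        if not os.path.exists(self.path):
            return None
        with open(self.path) as f:
            return json.load(f)


def transforms_to_publish(cache):
    """Publish nothing until a calibration exists, then the cached poses."""
    poses = cache.load()
    return {} if poses is None else poses
```

Creating a fresh `CalibrationCache` on the same path after `save()` mimics restarting the cameras: the previously stored poses are returned immediately, without capturing a new checkerboard.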

Running calibration

Before running the calibration, start your own launch files that bring up the cameras. Make sure this launch file includes the calibration tf publisher as described above. While calibrating, it is fine to keep nodes that are unrelated to the calibration running. This makes it possible to do 'online' calibration.

The camera_pose_calibration package includes sample launch files for 2, 3, or 4 cameras.

Launching with arguments could look like this:

To make these launch files work for your own camera setup, you need to specify several arguments to the launch files:

camera[n]_ns: the namespace of the [n]th camera. In this namespace, the calibration expects to find the image_rect and camera_info topics for the [n]th camera. Set this argument for each of your cameras.

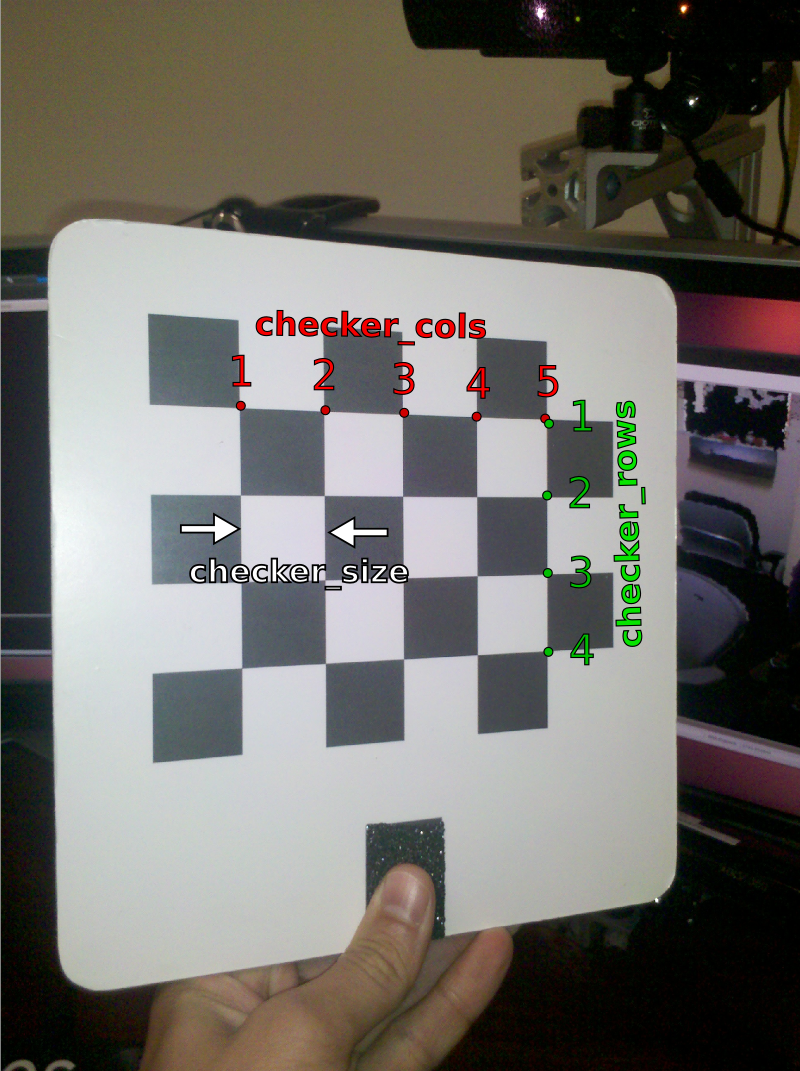

checker_rows: the number of checker corners horizontally.

checker_cols: the number of checker corners vertically.

checker_size: the size of one checker (in meters).
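The sketch below shows how these arguments relate to each other. It builds the `name:=value` strings that roslaunch accepts on its command line, and it generates the 3D corner positions of the board in its own frame, spaced checker_size apart with z = 0, following the meaning given above (checker_rows along the horizontal axis, checker_cols along the vertical axis). Numbering cameras from 1 is an assumption, and both function names are hypothetical. It also assumes, as with OpenCV's chessboard detector, that only interior corners are counted, so a board of 9 x 7 squares would give 8 x 6 corners.

```python
# Hypothetical helpers illustrating the launch arguments; not part of the package.

def launch_args(camera_namespaces, checker_rows, checker_cols, checker_size):
    """Build roslaunch 'name:=value' arguments, assuming cameras are numbered from 1."""
    args = [f"camera{n}_ns:={ns}" for n, ns in enumerate(camera_namespaces, start=1)]
    args += [
        f"checker_rows:={checker_rows}",
        f"checker_cols:={checker_cols}",
        f"checker_size:={checker_size}",  # metres
    ]
    return args


def board_corners(checker_rows, checker_cols, checker_size):
    """3D corner positions (metres) in the board frame; the board lies in z = 0.

    checker_rows corners along the horizontal (x) axis and checker_cols corners
    along the vertical (y) axis, matching the parameter descriptions above.
    """
    return [(i * checker_size, j * checker_size, 0.0)
            for j in range(checker_cols)
            for i in range(checker_rows)]
```

A mistake in checker_size scales every estimated translation by the same factor, so it is worth measuring the printed squares rather than trusting the nominal print size.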

Once the calibration launch file is running (and shows no errors), you can start capturing checkerboard poses. The calibration viewer helps you run and debug the capture of checkerboard poses.

Some basic checks:

- You see images for every camera in your system

- The entire checkerboard is visible in all cameras simultaneously

- The checkerboard corners are detected in each camera (shown by green circles on all corners)

The bar below each image shows how long the checkerboard has been stationary in that image. Once all the bars are green, you'll hear a 'beep' sound, indicating that a calibration sample was captured.
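The idea behind the stationary bars can be sketched as follows: for each camera, remember the corner positions at the moment the board became still, reset the timer whenever any corner moves more than a threshold away from them, and trigger a capture only when every camera has been still long enough. This is a hypothetical illustration, not the package's capture code; the 2-pixel threshold and 1-second duration are assumed values.

```python
# Hypothetical stillness detector; thresholds are assumptions, not the package's values.
import math


class StillnessTracker:
    """Tracks how long the detected board has stayed still in one camera."""

    def __init__(self, max_motion_px=2.0):
        self.max_motion_px = max_motion_px
        self.anchor = None       # corners at the start of the current still period
        self.still_since = None  # timestamp (seconds) when that period began

    def update(self, corners, stamp):
        """Feed detected corners [(u, v), ...] or None; return seconds the board has been still."""
        if not corners:
            # Board not detected: forget everything and start over.
            self.anchor, self.still_since = None, None
            return 0.0
        if (self.anchor is None
                or len(corners) != len(self.anchor)
                or max(math.dist(a, b) for a, b in zip(corners, self.anchor)) > self.max_motion_px):
            # Board appeared or moved: restart the timer from this frame.
            self.anchor, self.still_since = corners, stamp
        return stamp - self.still_since


def ready_to_capture(still_times, required_s=1.0):
    """Capture only when the board has been still long enough in every camera."""
    return bool(still_times) and all(t >= required_s for t in still_times)
```

Comparing against the corners at the start of the still period, rather than against the previous frame, means a slow drift eventually exceeds the threshold and resets the timer instead of going unnoticed.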

After every checkerboard capture, the non-linear optimizer will compute the relative camera poses and publish the results to tf. You can capture as many checkerboard poses as you want.